CCC supported three scientific sessions at this year’s AAAS Annual Conference, and in case you weren’t able to attend in person, we will be recapping each session. This week, we will summarize the highlights of the session, “Generative AI in Science: Promises and Pitfalls.” In Part Two, we will summarize Dr. Markus Buehler’s presentation on Generative AI in Mechanobiology.

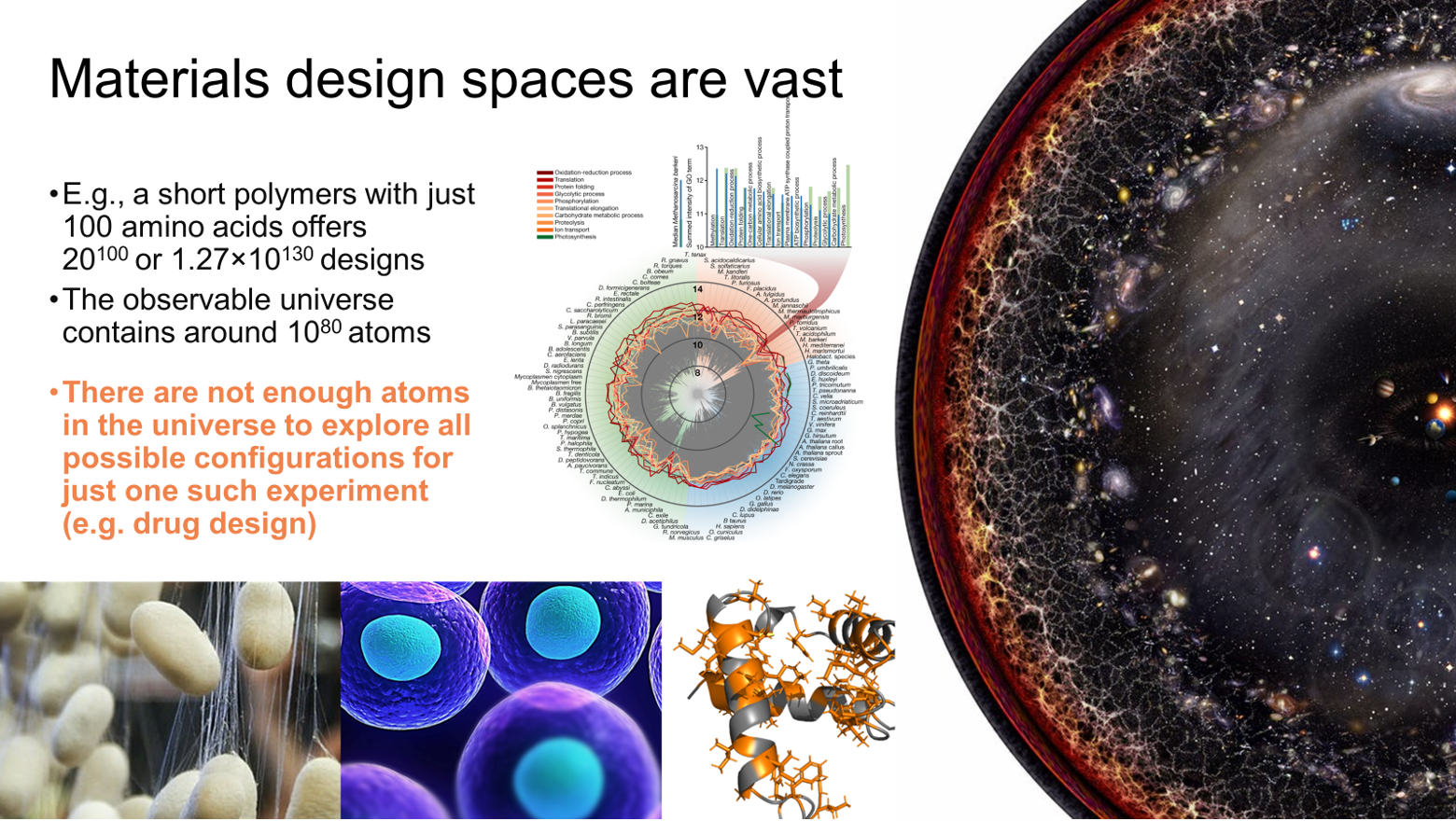

Dr. Markus Buehler began his presentation by addressing how generative models can be applied in materials science. Historically, materials scientists would collect data or develop equations to describe how materials behave, and then solve those equations with pen and paper. The emergence of computers allowed researchers to solve these equations much more quickly and to treat very complex systems, for instance using statistical mechanics. For some problems, however, traditional computing power is not enough. For example, the image below depicts the number of possible configurations of a single small protein: with any of the 20 amino acids at each of its 100 positions, there are 20^100, or roughly 1.27×10^130, possible designs. This number is far greater than the number of atoms in the observable universe (about 10^80), which makes exhaustively searching the design space intractable even for the largest supercomputers.
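The arithmetic behind this comparison is easy to check. The short Python sketch below assumes a 100-residue protein with one of the 20 standard amino acids at each position and uses the common order-of-magnitude estimate of 10^80 atoms in the observable universe; the constant and function names are illustrative only. Python's arbitrary-precision integers let it compute 20^100 exactly before formatting it.

```python
# Assumptions (for illustration): a 100-residue protein, one of the 20
# standard amino acids at each position, and ~10^80 atoms in the universe.
NUM_AMINO_ACIDS = 20
SEQUENCE_LENGTH = 100
ATOMS_IN_OBSERVABLE_UNIVERSE = 10**80

def sequence_space_size(alphabet_size: int, length: int) -> int:
    """Count every distinct sequence of `length` symbols drawn from the alphabet."""
    return alphabet_size ** length

def to_scientific(n: int, digits: int = 2) -> str:
    """Format a positive integer as '<mantissa>e<exponent>' using exact integer math."""
    exponent = len(str(n)) - 1      # exact base-10 exponent for a positive int
    mantissa = n / 10**exponent     # int / int true division gives a float of roughly [1, 10)
    return f"{mantissa:.{digits}f}e{exponent}"

designs = sequence_space_size(NUM_AMINO_ACIDS, SEQUENCE_LENGTH)
print("possible designs:", to_scientific(designs))
print("designs per atom in the universe:",
      to_scientific(designs // ATOMS_IN_OBSERVABLE_UNIVERSE))
```

Running it prints `1.27e130` possible designs and about `1.27e50` designs for every atom in the observable universe, which is why brute-force enumeration is out of reach.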

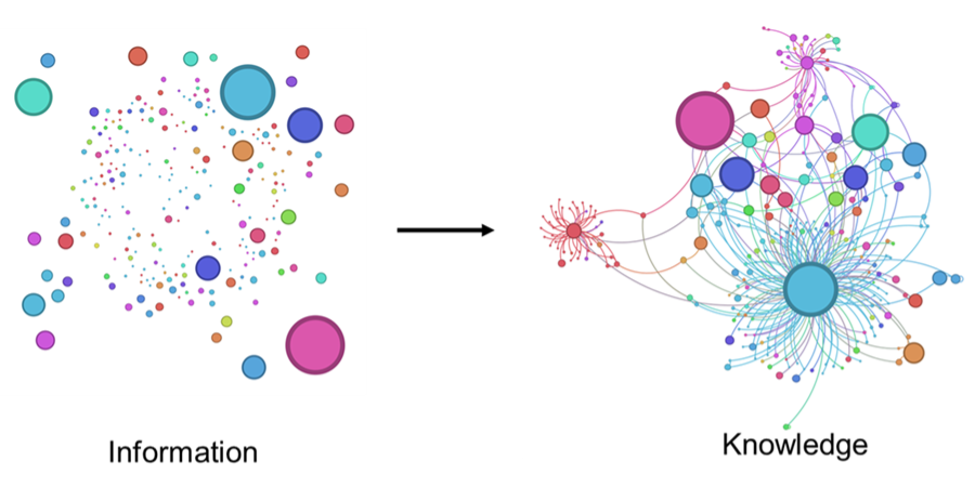

Before generative models, the equations and algorithms created by scientists were limited by a characteristic shared by all researchers since the beginning of time: humanity. “Generative AI allows us to go beyond the human imagination so we can invent and discover things that we have been unable to so far, either because we are not smart enough or because we don’t have the capacity to have access to every data point at the same time,” says Dr. Buehler. “Generative AI can be used to identify new equations and algorithms, and can solve these equations for us. Moreover, generative models can also explain to us how they developed and solved these equations, which, at high levels of complexity, is absolutely necessary for researchers to understand models’ ‘thought processes’.” A key aspect of how these models work is that they translate information (e.g., the results of measurements) into knowledge by learning a graph representation of it.
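As a minimal sketch of what a graph representation of knowledge can look like, assume that information arrives as (subject, relation, object) triples. The Python below builds an undirected graph from such triples and uses breadth-first search to find a chain of relations linking two concepts. The triples, `build_graph`, and `shortest_path` are hypothetical and invented for illustration; path-finding is only one simple way to query such a graph, and this is not the pipeline described in Dr. Buehler's paper.

```python
from collections import defaultdict, deque

# Hypothetical (subject, relation, object) triples, invented for illustration.
# In practice such triples might be extracted from papers or measurement data.
TRIPLES = [
    ("collagen", "forms", "fibrils"),
    ("fibrils", "increase", "toughness"),
    ("mineral filler", "increases", "stiffness"),
    ("stiffness", "trades off with", "toughness"),
    ("porosity", "reduces", "stiffness"),
]

def build_graph(triples):
    """Build an undirected adjacency map; each edge keeps its relation label."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((obj, rel))
        graph[obj].append((subj, rel))
    return graph

def shortest_path(graph, start, goal):
    """Breadth-first search: return the node list from start to goal, or None."""
    if start not in graph or goal not in graph:
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through the parent links to reconstruct the path.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor, _relation in graph[node]:
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None

graph = build_graph(TRIPLES)
print(shortest_path(graph, "collagen", "porosity"))
```

Here the search connects "collagen" to "porosity" through fibrils, toughness, and stiffness, a chain that no single triple states directly. Surfacing such indirect connections is the kind of insight a graph view of data can make easier to spot.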

Source: M.J. Buehler, Accelerating Scientific Discovery with Generative Knowledge Extraction, Graph-Based Representation, and Multimodal Intelligent Graph Reasoning, arXiv, 2024, https://arxiv.org/abs/2403.11996

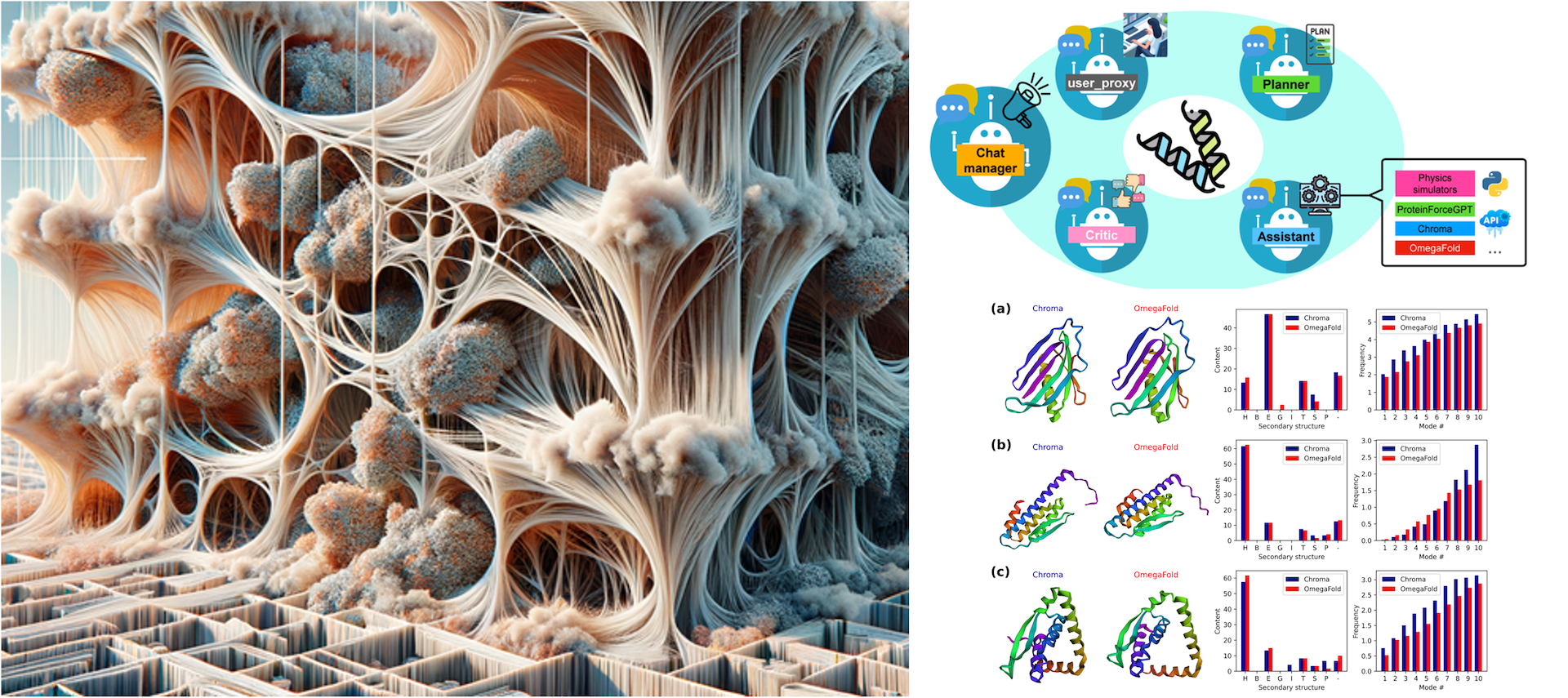

The figure below shows a new material design, a hierarchical mycelium-based composite, designed using generative AI and featuring a never-before-seen combination of mycelium rhizomorphs, collagen, mineral filler, surface functionalization, and a complex interplay of porosity and material.

Left: Mycelium composite. Source: M.J. Buehler, Accelerating Scientific Discovery with Generative Knowledge Extraction, Graph-Based Representation, and Multimodal Intelligent Graph Reasoning, arXiv, 2024, https://arxiv.org/abs/2403.11996. Right: Protein design. Source: A. Ghafarollahi, M.J. Buehler, ProtAgents: Protein discovery via large language model multi-agent collaborations combining physics and machine learning, https://arxiv.org/abs/2402.

Furthermore, generative AI can help us visualize complex systems. Instead of describing interactions between individual atoms, AI can represent these interactions as graphs, which describe mechanistically how materials function, behave, and interact at different scales. These tools are powerful, but on their own they cannot handle the full complexity of these problems. To address this, we can combine many models, such as one that runs physics simulations and another that predicts forces and stresses or designs proteins. When these models communicate with one another, they become agentic models, in which each individual model is an agent with a specific purpose. The output of each model is passed to the other models and considered in the overall evaluation of the models’ outputs. Agentic models can run simulations on existing data and generate new data, so in areas with limited or no data, researchers can use physics models to generate the data needed to run simulations. “This type of modeling is one of the future areas of growth for generative models,” says Dr. Buehler. These types of models can solve problems previously considered intractable even on supercomputers, and some of them can run on a standard laptop.
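To make the idea of cooperating agents more concrete, here is a minimal Python sketch under loudly stated assumptions. Three hypothetical agents (`physics_agent`, `surrogate_agent`, `critic_agent`) share their outputs through a common dictionary, a simple blackboard pattern. A toy Hooke's-law model (F = k·x with an assumed k = 2.5, arbitrary units) stands in for a physics simulator that generates data where none exists. Real agentic systems like those described in the talk coordinate large models and far richer simulations; this only illustrates how outputs flow between specialized agents.

```python
K_TRUE = 2.5  # assumed stiffness of the toy "physics model" (arbitrary units)

def simulate_force(x: float) -> float:
    """Toy physics model (Hooke's law, F = k * x) standing in for a real simulator."""
    return K_TRUE * x

def physics_agent(board: dict) -> None:
    # Generates synthetic training data, since we assume no measured data exist.
    board["data"] = [(x, simulate_force(x)) for x in (0.1 * i for i in range(1, 11))]

def surrogate_agent(board: dict) -> None:
    # Fits F = k * x by least squares through the origin: k = sum(x*F) / sum(x^2).
    data = board["data"]
    board["k_hat"] = sum(x * f for x, f in data) / sum(x * x for x, _ in data)

def critic_agent(board: dict) -> None:
    # Checks the surrogate against a fresh simulation outside the training range.
    x_test = 2.0
    error = abs(board["k_hat"] * x_test - simulate_force(x_test))
    board["accepted"] = error < 1e-6

def run_pipeline(agents) -> dict:
    """Run each agent in order; every agent reads and writes the shared board."""
    board: dict = {}
    for agent in agents:
        agent(board)
    return board

result = run_pipeline([physics_agent, surrogate_agent, critic_agent])
print(round(result["k_hat"], 6), result["accepted"])
```

The surrogate recovers a stiffness of about 2.5 from purely simulated data, and the critic accepts it after an independent check. Because agents interact only through the shared board, adding or reordering agents means editing the list passed to `run_pipeline`.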

One of the main challenges that researchers are still addressing in designing such physics-inspired generative AI models is how to build them elegantly, and how to make them more similar to the human brain or other biological systems. Biological systems can change their behavior; for example, when you cut your skin, the cut heals over time. Models can be built to act similarly. Instead of training a model to perform one fixed behavior at all times, we can train it to reassemble itself dynamically. In some sense, we train models to first think about the question asked and how they might reconfigure ‘themselves’ to best solve a certain task. This can be used to make quantitative predictions (e.g., solving a highly complex task such as predicting the energy landscape of a protein), to make qualitative predictions and reason over the results, and to integrate different expertise and skills as answers to complex tasks are developed. Importantly, the models can also explain to us how they arrived at a solution, how a particular system works, and other details that may be of interest to the human scientist. We can then run experiments to verify the predictions of these simulations for the most promising ideas, such as in materials design applications.

Dr. Buehler then spoke to specific applications of these generative models in materials science. “To calculate the energy landscape to solve the inverse folding problem given a certain protein, we don’t even need to know what the protein looks like; I just need to know the building blocks and DNA sequence that defines this protein and the conditions the experiment is conducted in. If you want a particular kind of protein with a certain energy landscape, we can also design that protein, on demand. Agentic models can do this because they have the capacity to combine different models, predictions and data. This can be used to synthesize complex novel proteins that don’t exist in nature. We can invent proteins that have super strong fibers as replacements for plastics, or create better artificial food, or new batteries. We can use nature’s toolbox to expand past what nature has to offer, and go far beyond evolutionary principles. For example, we can design materials for certain purposes, such as a material that is highly stretchy or has certain optical properties, or materials that change their properties based on external cues. The models that are emerging now are not only able to solve these problems, but also provide the capability to explain to us how these problems are solved. They can also elucidate why certain strategies work and others don’t. They can predict new research, such as when we ask a model to predict in great detail how a certain material will behave, and we can validate this with research studies in labs, or with physics simulations. This is mind-boggling, and sounds futuristic, but it is actually happening today.”

Thank you so much for reading! The next blog in this four-part series recaps Dr. Duncan Watson-Parris’ presentation on Generative AI in the Climate Sciences.