When Tape Op's 420th issue rolls out in 2040, the way we record, edit, mix, and master audio will be transformed entirely from the processes we use today. Currently we are separated by physical barriers — big consoles, big speakers, large acoustically designed rooms, outboard racks, patching bays, monitor screens, mice, and keyboards. This is the "fourth wall" that remains between creators and the music. Within three decades, that wall will all but disappear. Audio engineering will become virtual and immersive.

TECHNOLOGY IMPROVEMENTS FOLLOW A PREDICTABLE TREND

More than sixty years ago, people thought Alan Turing was crazy. The father of algorithmic computing, Turing predicted that computers would employ around one gigabit of storage by the turn of the century. He was right. In 1965, Gordon Moore famously speculated that the number of transistors on an integrated circuit (IC) would keep doubling at a steady pace, a rate he revised in 1975 to a doubling every two years. He was right too, if a tad conservative. We've since learned that virtually every technology follows a similarly predictable growth slope.

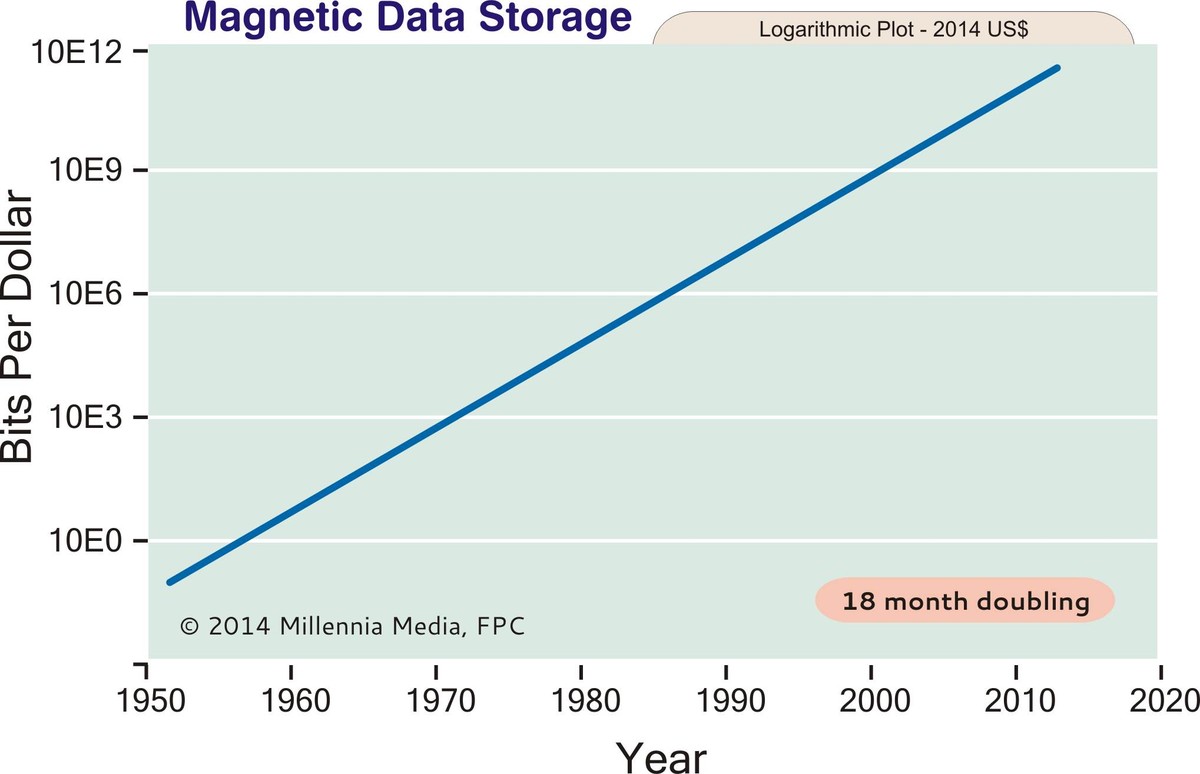

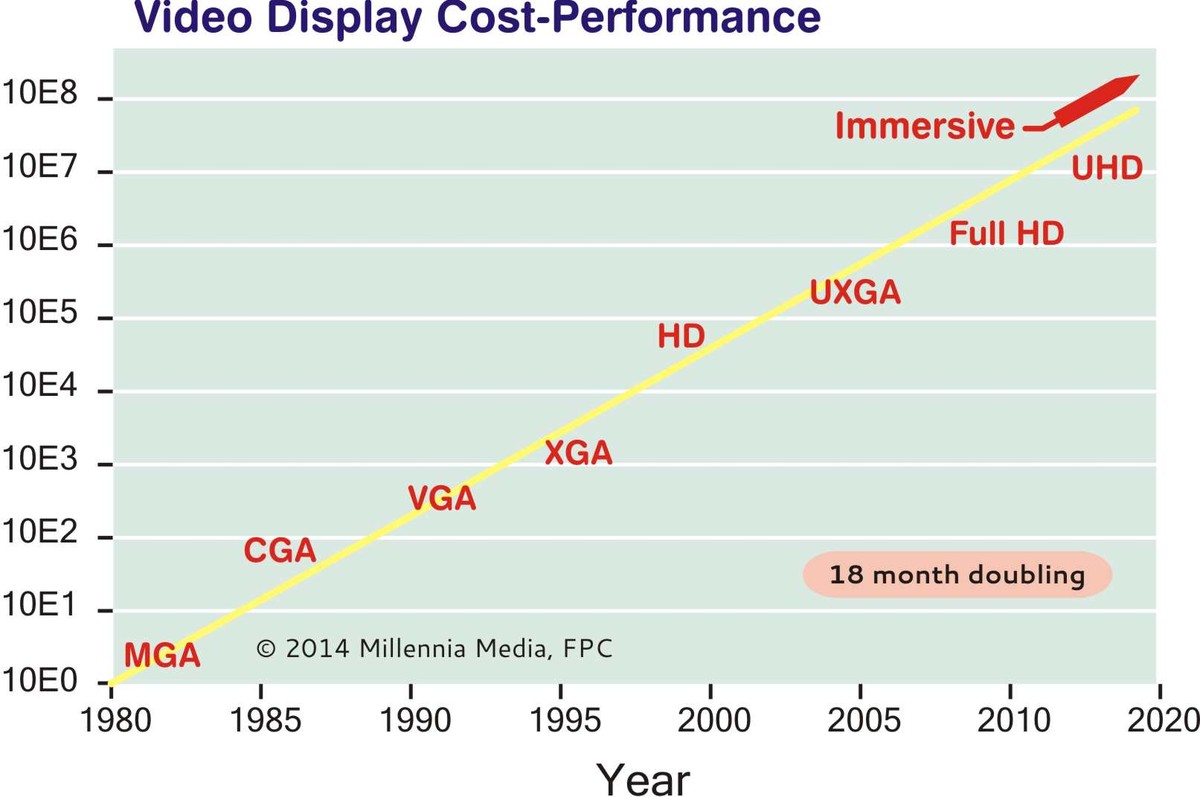

For example: since 1990, the cost-performance efficiency (CPE) of wireless devices has doubled every seven months. Since 1980, the CPE of video display technology has doubled every 18 months. And since the early 1950s, magnetic storage bits-per-dollar has doubled every 18 months.

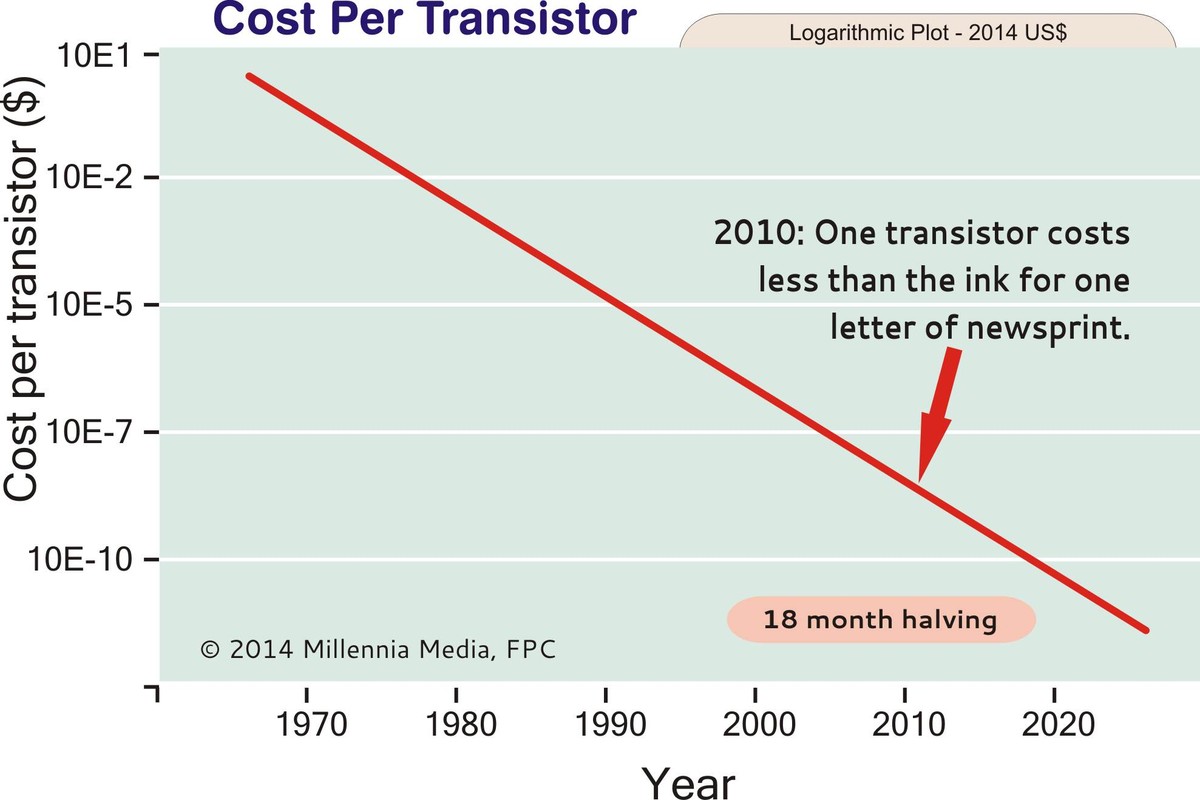

Since 1970, power consumption per data instruction has halved every 18 months. DNA sequencing cost has halved every ten months since 1990 (NEC is now shipping a portable crime-scene DNA analyzer that takes just 25 minutes). The cost of transistors has halved every 16 months since 1970. One transistor now costs less than the ink for one letter printed in Tape Op.

Similar CPE slopes are seen for dynamic RAM since 1970 (18-month doublings), calculations-per-second since 1950 (24-month doublings), MIPS-per-dollar since 1950 (22-month doublings), Internet global backbone bits-per-second (14-month doublings), Internet data traffic (7-month doublings), and growth in supercomputer FLOPS since 1990 (14-month doublings).
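Doubling periods are easier to compare once they are converted to an annual rate. The sketch below does that arithmetic for several of the periods quoted above. It only restates those periods and makes no claim about the underlying data. The function names are our own, chosen for illustration.

```python
# Convert a doubling (or halving) period in months into a yearly multiplier.
# The periods below are the ones quoted in the text; the functions are illustrative.

def annual_factor(months_per_doubling: float, halving: bool = False) -> float:
    """Return the multiplier applied each year for a given doubling/halving period."""
    base = 0.5 if halving else 2.0
    return base ** (12.0 / months_per_doubling)


def fold_change(months_per_doubling: float, years: float) -> float:
    """Total growth factor accumulated over `years` at a fixed doubling period."""
    return 2.0 ** (years * 12.0 / months_per_doubling)


if __name__ == "__main__":
    quoted_periods = {
        "wireless CPE": 7,
        "video display CPE": 18,
        "Internet backbone bits/s": 14,
        "MIPS per dollar": 22,
        "calculations per second": 24,
    }
    for name, months in quoted_periods.items():
        growth = (annual_factor(months) - 1.0) * 100.0
        print(f"{name:28s} doubles every {months:2d} months -> +{growth:5.1f}% per year")
```

The same function handles the halving figures. For the transistor-cost trend (halving every 16 months), `annual_factor(16, halving=True)` comes out near 0.59, a drop of roughly 40% per year.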

The list continues for scores of technologies, including (especially!) audio engineering technologies.

AUDIO DYNAMIC RANGE INNOVATIONS FOLLOW A TREND

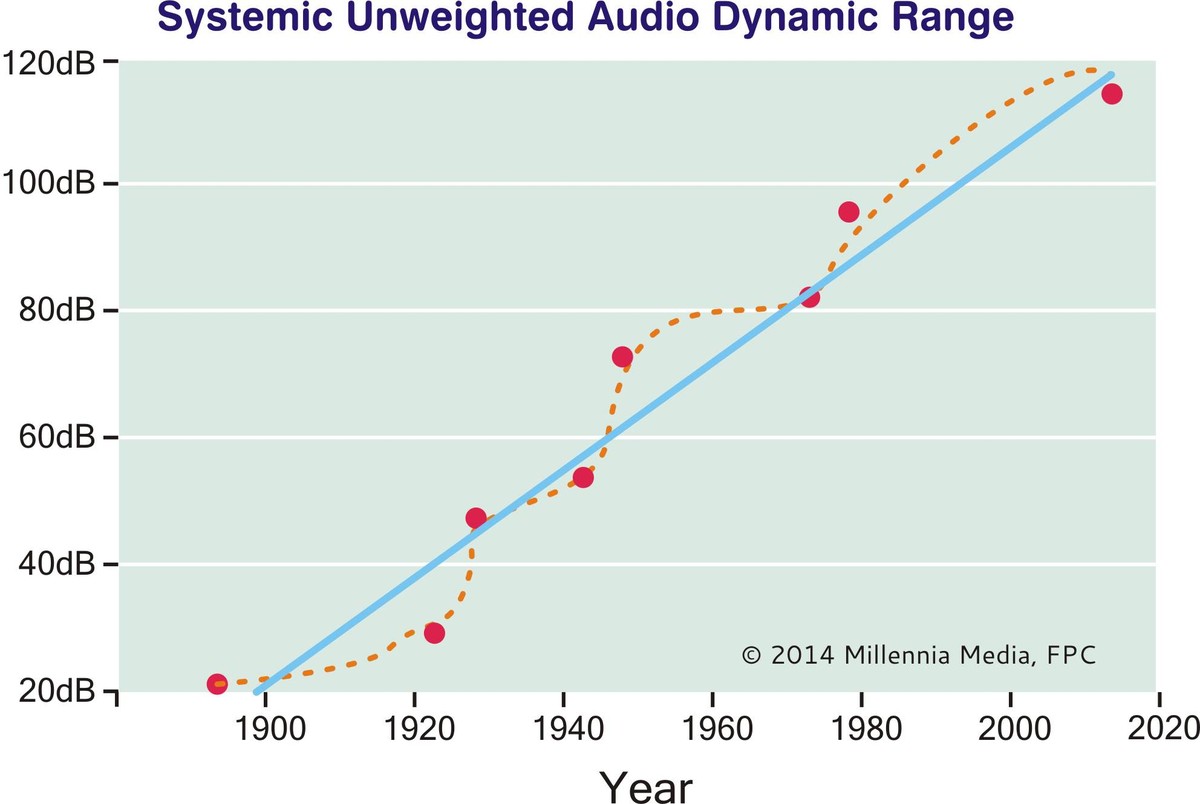

At the beginning of commercial recorded sound (1890), we achieved a systemic dynamic range of around 15 dB (3 bits equivalent). By the 1930s, vacuum tubes, condenser mics, and electric cutter heads improved dynamic range to around 35 dB (6 bits). Magnetic tape gave us a 60-70 dB range and more, reaching about 12 bits once technologies like Dolby SR became available. With the advent of commercial digital recording in the 1970s and '80s, early systems were capable of 90 dB dynamic range (15 bits).

Today, we're achieving a best-case unweighted systemic dynamic range of around 110-115 dB (19 bits) from concert hall to home playback, but only under controlled, pristine, lab-like conditions. A high-quality home system playing better-than-average program material is possibly delivering around 16 bits.
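The bit figures in parentheses follow the usual rule of thumb that each bit of linear PCM is worth 20·log10(2), or about 6.02 dB, of dynamic range. Here is a minimal sketch of that conversion. It deliberately ignores the extra ~1.76 dB that appears in the full-scale sine-wave SNR formula.

```python
import math

# Each bit of linear PCM doubles the amplitude range: 20*log10(2) ~ 6.02 dB per bit.
DB_PER_BIT = 20.0 * math.log10(2.0)


def db_to_bits(db: float) -> float:
    """Equivalent bit depth for a given dynamic range in dB (rule of thumb)."""
    return db / DB_PER_BIT


def bits_to_db(bits: float) -> float:
    """Dynamic range in dB implied by a given bit depth (rule of thumb)."""
    return bits * DB_PER_BIT


if __name__ == "__main__":
    # Dynamic-range milestones quoted in the text.
    for label, db in [("1930s electric", 35), ("early digital", 90), ("best case today", 115)]:
        print(f"{label:16s} {db:4d} dB ~ {db_to_bits(db):4.1f} bits")
    print(f"16-bit playback ~ {bits_to_db(16):.1f} dB")
```

By the same rule, the 16-bit home system mentioned above works out to roughly 96 dB.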

Let's visualize the history of audio dynamic range on a growth chart.

Looking at technology growth with too narrow a time frame obscures the long-term trend. For instance, from 1885 through 1925, acoustic dynamic range didn't improve much — it took the breakthrough innovation of electric recording to significantly improve dynamic range. This is known as the "nested S-curve," or "step and wait" theory of growth. Also, economic incentive drives innovation and improvement. Generally, those technologies with the greatest economic incentives improve the fastest.

When we "average" (or "smooth") 120 years of dynamic range, we see that its growth slope is predictable. From the beginning of audio recording, commercial dynamic range has improved by roughly 0.8 dB per year, the equivalent of around one bit every seven and a half years. Thus, we can confidently extend our growth slope into the future and expect the trend to continue... until real-world dynamic range is no longer limited by technical or economic factors in audio systems.
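The smoothing described above amounts to drawing a straight line through the historical record. The sketch below uses only the two endpoints to reproduce the roughly 0.8 dB-per-year slope and the years-per-bit figure. A least-squares fit over every milestone would be more robust. Treating 2013 as "today" and 112.5 dB (the midpoint of 110-115 dB) as today's value are our assumptions. The extrapolation has no ceiling, which is exactly the caveat the text raises.

```python
import math

DB_PER_BIT = 20.0 * math.log10(2.0)  # ~6.02 dB per bit

# Endpoints taken from the text; treating 2013 as "today" and 112.5 dB
# (the midpoint of 110-115 dB) as today's figure are our assumptions.
START_YEAR, START_DB = 1890, 15.0
TODAY_YEAR, TODAY_DB = 2013, 112.5


def db_per_year() -> float:
    """Average improvement implied by the two endpoints (a straight-line 'smoothing')."""
    return (TODAY_DB - START_DB) / (TODAY_YEAR - START_YEAR)


def years_per_bit() -> float:
    """How many years the smoothed trend takes to add one bit (~6.02 dB)."""
    return DB_PER_BIT / db_per_year()


def extrapolate_db(year: int) -> float:
    """Naive linear extrapolation; ignores any physical or economic ceiling."""
    return TODAY_DB + db_per_year() * (year - TODAY_YEAR)


if __name__ == "__main__":
    print(f"slope: {db_per_year():.2f} dB/year")
    print(f"one bit every {years_per_bit():.1f} years")
    print(f"2040 (naive): {extrapolate_db(2040):.0f} dB")
```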

TRENDS PREDICT THE FUTURE

We've seen how and why technology advances and how we can confidently predict its growth over time. Let's now turn our attention to the next 40 years and attempt to anticipate the next two generations of audio engineering. It's important to recognize that the professional audio market will not be the primary driver of our future tools. The economic engines driving key changes in pro audio will be gaming, film, and television, as well as the military, with combined global revenue of over $500 billion. Pro audio will be the beneficiary of this massive innovation investment, in what I call the "first-person shooter era" of popular media.

Thus, to better understand the future of audio engineering, we need to explore a number of emerging technologies, as well as their possible futures over the next 40 years. Then we will converge our exploration into a singular vision for audio creation and delivery.

GESTURAL CONTROL

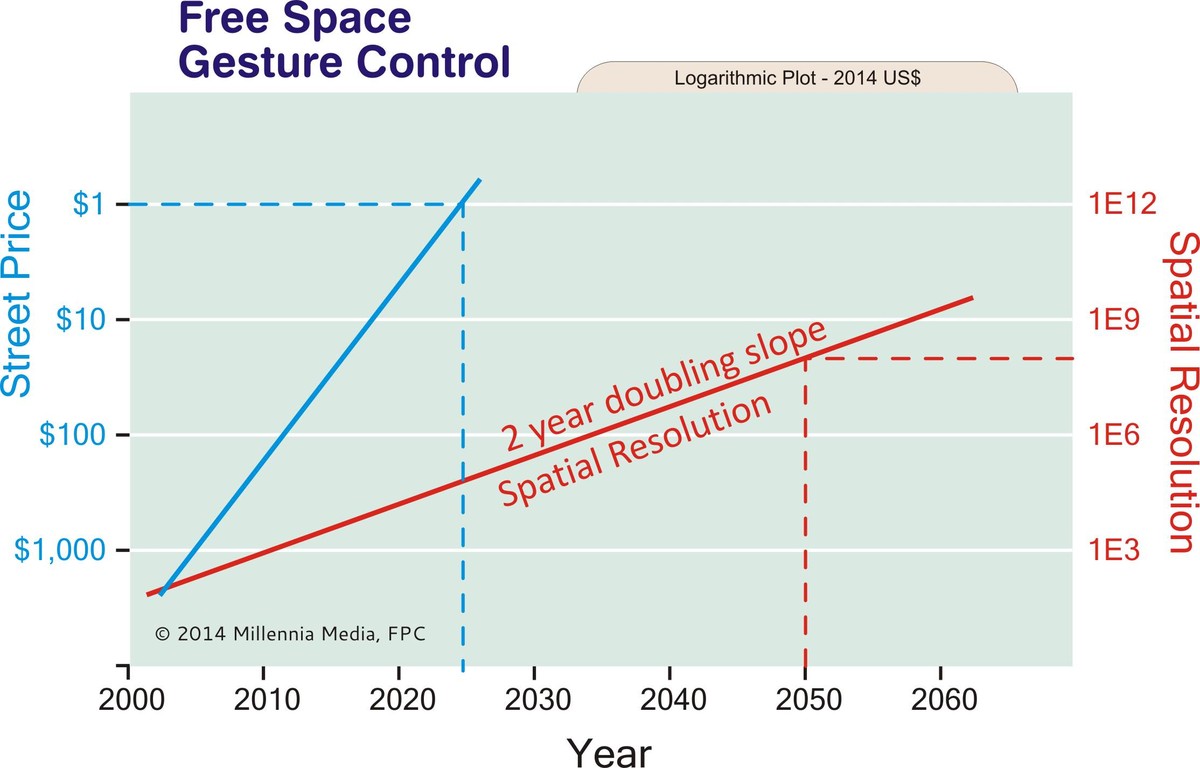

Remember the big gesture-controlled video screens in the film Minority Report? The actual technology would likely have cost more than $1 million in 2001. Today, we have $100 consumer gesture devices that do more than Tom Cruise could pull off in that film. Samsung televisions respond to hand gestures while you sit on the couch. Hewlett-Packard notebooks are currently shipping with the Leap Motion Controller (Leap's website has a video demonstrating its considerable capabilities). How soon will free-air gestural control replace the mouse? When will gestural control become the de facto human/machine interface?

Consider this: today a company called Microchip sells an e-field gestural control chip for about $4. That IC comes fully equipped with no fewer than five A-to-D converters, flash memory, and a powerful 32-bit DSP engine that interprets myriad forms of 3D human gestures, flicks, angulars, and symbolics. The chip has a 3D spatial resolution of 150 positions per inch, and can track at 200 positions per second. At $4 a chip, the migration from physical control to free-air control has begun. Single-finger moves, two-finger moves, different kinds of taps and swipes: our mobile devices and tablets have trained us well. We have become deeply familiar and entirely comfortable with gesture control on hard surfaces. The leap to free-air is a natural evolution. Early adopters are already replacing their mice and touch interfaces with gesture. How long before free-air gesture becomes the standard?

We conservatively assume that gesture technology resolution and accuracy will double every two years. Common gestural devices ($100 at 150 PPI by today's standards) will boast two orders of magnitude greater resolution by roughly 2025. Costing only a dollar, with 15,000 3D positions per inch, such devices will allow far greater freedom and range of movement. By approximately 2030 to 2035, sophisticated, high-resolution, free-air gestural control will be a mass-produced commodity. Will gesture replace touch screens and mice by 2035? No. But the transition will be well underway. The next 35 years of human-computer interaction are clearly free-space and gestural.
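Under the two-year doubling assumption stated above, the time needed for a given improvement factor is the doubling period multiplied by log2 of that factor. The sketch below shows that a 100x gain takes a little over 13 years. That is how 150 PPI becomes roughly 15,000 PPI in the mid-2020s. The function names are illustrative.

```python
import math


def years_to_reach(factor: float, doubling_years: float = 2.0) -> float:
    """Years needed to improve by `factor` at a fixed doubling period."""
    return doubling_years * math.log2(factor)


def resolution_after(years: float, start_ppi: float = 150.0, doubling_years: float = 2.0) -> float:
    """Projected resolution (positions per inch) after `years` of steady doubling."""
    return start_ppi * 2.0 ** (years / doubling_years)


if __name__ == "__main__":
    t = years_to_reach(100)  # "two orders of magnitude"
    print(f"100x takes about {t:.1f} years at a two-year doubling")
    print(f"150 PPI becomes ~{resolution_after(t):,.0f} PPI")
```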

Now let's move on to 3D virtualization. We need to think systemically, with video, audio, and head-motion tracking all working seamlessly as a single system. Let's start with a look at virtualized audio.

SPHERICAL AUDIO

Both gaming and film are moving quickly into providing a sense of total audio immersion. In real acoustic spaces (movie theaters, etc.), we're seeing the delivery of spherical audio from emerging technologies like Dolby Atmos, DTS Neo, and Barco Auro. However, these immersive real-space technologies require more speakers and amplifiers, more expense, and a great deal more work to maintain — things that consumers embrace slowly, if at all. The average consumer has balked at 6 speakers. Requiring 10, 14, or 22 speakers (and amps) is a non-starter. Market realities suggest that the primary thrust of 3D audio innovation will occur over headphones.

First-generation 3D headphone products such as DTS Headphone:X are already breaking ground. Over the next decades, popular gaming and entertainment media will lead the relentless push towards fully immersive audio realism, predominantly over headphones.

Full-coverage headphones (not just ear buds) have exploded into mass consciousness in just the last few years. This is no accident or fluke, and the trend will continue to accelerate. Popular culture is becoming increasingly conditioned into accepting "cans" as a primary method of consuming audio.

Jimmy Iovine and Andre Young (Dr. Dre), the creators of Beats by Dre headphones, have arguably done more than anyone else to position headphones as a generational, cultural, and global-style statement. Beats is now selling well over $1 billion of consumer audio every year, and has captured (indeed, created) over 60% of the above-$100 head-worn audio market. There are now entire stores devoted to head-worn audio technology.

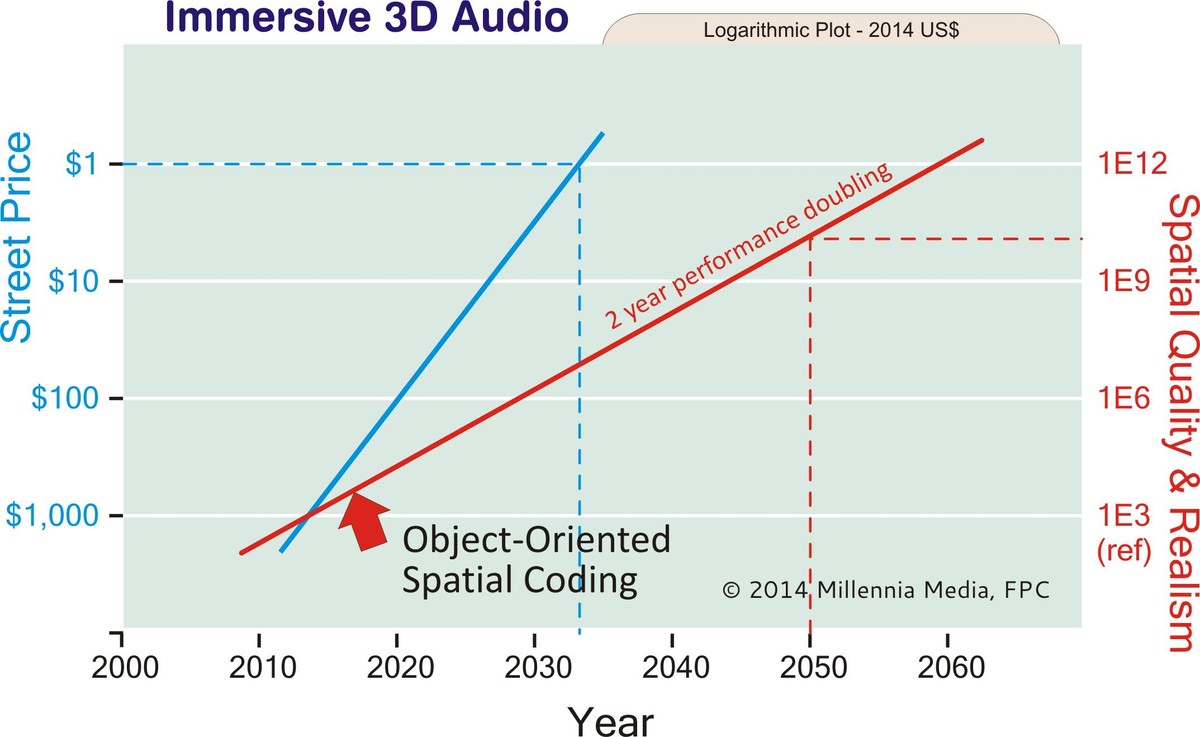

Over the next 20 to 30 years, 3D sound-field production and design will be one of the biggest growth areas in pro audio. Microphone designers, headphone makers, audio software engineers, and specialized post-production engineers will move from today's X-dot-X (5.1, etc.) paradigm to a seamlessly spherical, object-oriented sound field. If we plot a 3D audio growth chart with a two-year doubling projection, today's $1,000 3D audio solution will enjoy commodity pricing after 2025, along with a 100-fold improvement in "spatial and timbral resolution experience" over headphones.
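One established way to carry a spherical, object-oriented sound field is to store each sound as an object (audio plus position metadata) and encode it into a spherical-harmonic representation such as first-order Ambisonics. The sketch below assumes the traditional B-format (FuMa) weighting, with azimuth positive to the left. It illustrates the idea only. It is not how Atmos, DTS, or any particular headphone product renders internally, and the class and function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AudioObject:
    """A mono sound plus position metadata (the 'object' in object-oriented audio)."""
    samples: List[float]
    azimuth_deg: float    # 0 = front, +90 = left (counterclockwise), traditional B-format convention
    elevation_deg: float  # 0 = horizon, +90 = straight up


def encode_foa(obj: AudioObject) -> Tuple[List[float], List[float], List[float], List[float]]:
    """Encode one object into first-order B-format (W, X, Y, Z) with FuMa W weighting."""
    az = math.radians(obj.azimuth_deg)
    el = math.radians(obj.elevation_deg)
    gains = (
        1.0 / math.sqrt(2.0),          # W: omnidirectional
        math.cos(az) * math.cos(el),   # X: front/back figure-eight
        math.sin(az) * math.cos(el),   # Y: left/right figure-eight
        math.sin(el),                  # Z: up/down figure-eight
    )
    w, x, y, z = ([g * s for s in obj.samples] for g in gains)
    return w, x, y, z


def mix(*fields):
    """Sum several encoded sound fields channel by channel (equal-length inputs assumed)."""
    return tuple([sum(vals) for vals in zip(*chans)] for chans in zip(*fields))
```

Because every object ends up in the same four channels, any number of sources can be summed with `mix` and decoded later for headphones or for whatever speaker layout is at hand.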

Conservatively, by 2030 we should realize highly realistic immersive audio as part of every low-cost portable device, gaming console, and home entertainment system. And by about 2040, on-ear audio should rival, or exceed, the subjective performance of today's best audiophile rooms and room speakers. Moreover, in a very short time (perhaps 2020?) common commercial music will be routinely mixed in full 3D immersion, and delivered in an open-source format (most likely a derivative of Atmos or Neo).

VIRTUALIZED VISUALS

Virtualized imagery plays a central role in the future of audio production. The future for head-worn visual displays is clear: higher resolution, finer dot pitch, better dynamic range, lower latency, and, of course, a relentless evolution towards three-axis immersion as our standard image format. By now, many of us have seen photos of the Google Glass prototype. Glass is a head-worn computer with a head-mounted display. Sources claim that Glass will be available in 2014 for a street price of around $1,500.

This is a true paradigm shift. If there were only one takeaway from our brief look into the future, it should be this: we are moving from a hand-held device culture to a head-worn device culture. It won't be long before smart mobile computers are designed into small, lightweight, head-worn devices not unlike Glass, but increasingly powerful and ubiquitous. Vendors such as Samsung, Olympus, Microsoft, Oakley, Sony, Intel, and Apple, along with easily a dozen startups, are all reportedly developing head-worn smart devices.

While Google and others are defining the mainstream of head-worn gear, I think there's another kind of device that's more directly applicable to the future of audio and media production: gaming displays. And of all the gaming displays now in development, the Oculus Rift is perhaps among the most intriguing, with one discrete video display per eye for true 3D (resolution in development is 1080p). The Rift has unrestricted head-motion tracking: if you turn your head, the scene (both audio and visual) moves with you in lifelike, immersive realism.

Watching gamers use the Oculus Rift offers a window into the power of new display technology. To see what I mean, watch the YouTube video "Oculus Rift Best and Funniest." What you will see is the most deeply convincing, fully immersive virtual-reality experience to date. The uncanny experiences of Rift reality might even be called "disturbing."

Oculus plans to ship their first commercially available product by the time you read this.

Let's return to our trend analysis: comprehensive video display cost-performance efficiency (CPE) since 1980 shows a doubling roughly every 18 months. Thus, by 2025 the CPE of immersive displays will be at least 100 times better — at a commodity entry point.

By 2035, immersive visuals will be at least 10,000 times more powerful than today. And by 2050, we can reasonably project that commodity-grade, head-worn virtuality will be nearly indistinguishable from what we see with our own eyes in real-space. We also know that head displays will be much smaller, and much lighter, perhaps using a technique called direct projection where images are projected (scanned) directly onto the human retina, one pixel at a time.
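The display projections above follow directly from the 18-month doubling. The sketch below checks them. It assumes 2013 as the starting year, which is our assumption, and the function name is illustrative.

```python
# Check the display projections: 18-month doubling in cost-performance.
# Treating 2013 as "today" is our assumption.
BASE_YEAR = 2013
DOUBLING_YEARS = 1.5


def cpe_gain(target_year: int, base_year: int = BASE_YEAR, doubling_years: float = DOUBLING_YEARS) -> float:
    """Improvement factor accumulated between base_year and target_year."""
    return 2.0 ** ((target_year - base_year) / doubling_years)


if __name__ == "__main__":
    for year, claimed in [(2025, 100), (2035, 10_000)]:
        gain = cpe_gain(year)
        print(f"{year}: ~{gain:,.0f}x (text claims at least {claimed:,}x) -> {gain >= claimed}")
```

Both "at least" claims hold with room to spare: about 256x by 2025 and roughly 26,000x by 2035. They still hold if the starting year is moved to 2014.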

HEAD MOTION TRACKING

Immersive AV would not be possible if it did not "track" with natural head movements. When you turn your head, the virtual sound and picture must react like they would in real sensory space. Effective head tracking requires near-zero latency response, with high spatial resolution in all axes of head movement. Gestural control and head tracking technologies share many of the same design attributes, and they appear to be maturing at similar rates. Popular head motion tracking systems for gaming today cost around $200 and offer around 640x480 raw resolution, 100 frames-per-second sample rate, and under 10 ms response. Lab-grade units with better resolution and response are also available. Assuming a two-year doubling in cost-performance efficiency, high-resolution head-motion tracking should reach commodity status by 2025, offering a large field of use and near-imperceptible latency. By 2025, IC manufacturers will offer low-cost, second or third-generation silicon head-tracking solutions. And by 2035, ultra-high resolution, low-cost, head-motion tracking will be common on all VR devices.
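The core of head-tracked audio is counter-rotation. A world-fixed source must be re-rendered at its position relative to the head, and any latency shows up as the scene trailing the head during a turn. Here is a minimal sketch that assumes yaw only (no pitch or roll) and left-positive azimuth. The 200 degrees-per-second head speed is an assumed example figure, not from the text, and the function names are illustrative.

```python
def relative_azimuth(source_world_deg: float, head_yaw_deg: float) -> float:
    """Azimuth of a world-fixed source relative to the listener's nose, wrapped to [-180, 180)."""
    return (source_world_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def lag_error_deg(head_speed_deg_per_s: float, latency_s: float) -> float:
    """How far the rendered scene trails the head during a turn, for a given end-to-end latency."""
    return head_speed_deg_per_s * latency_s


if __name__ == "__main__":
    # A source fixed 30 degrees to the left; the listener turns their head 90 degrees left.
    print(relative_azimuth(30.0, 90.0))  # -> -60.0: the source now sits 60 degrees to the right
    # A brisk 200 deg/s head turn (assumed figure) against the ~10 ms response quoted above.
    print(lag_error_deg(200.0, 0.010))
```

At 10 ms, a fast head turn leaves the scene about two degrees behind. This is why the text stresses near-zero latency alongside resolution.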

CONVERGENCE

Now let's put all of this technology together. We begin to see that the era of virtual A/V post-production is not far off, and in some ways has already begun. Over the next three decades, all of the technologies we've just explored will converge into a singular pro audio ecosystem. As this happens, the way we record, edit, mix, and master audio will radically change.

By 2050, post houses with giant mixing consoles, racks of outboard hardware and patch panels, video editing suites, box-bound audio monitors, touch screens, hardware input devices, and large acoustic control rooms will become historical curiosities. We will have long ago abandoned the mouse. DAW video screens will be largely obsolete. Save for a quiet cubicle and a comfortable chair, the large, hardware-cluttered "production studio" will be mostly a quaint memory. Real-space physicality (e.g., pro audio gear) will be replaced with increasingly sophisticated head-worn virtuality. Trend charts suggest that by 2050, head-worn audio and visual 3D realism will be virtually indistinguishable from real-space. Microphones, cameras, and other front-end capture devices will become 360-degree spatial devices. Post-production will routinely mix, edit, sweeten, and master in head-worn immersion.

During this transition, perhaps the only remaining piece of CEH (clunky external hardware) will be the subwoofer, which cannot be emulated with a head-worn device. In live environments, such as movie theaters and concerts, patrons will be given spherical augmentation headsets similar to the 3D glasses handed out in today's theaters. Theaters will be smaller, but will deliver a total sensory-immersive experience using sub-frequencies, physical agitation, and head-worn AV. Technology charts show that electro-mechanical devices have been halving in size every 2.5 years for the last 50 years. This suggests that head-worn A/V immersion hardware will continue to shrink in size and weight as its resolution increases. It's not a stretch to envision ultra-lightweight "transparent headgear" that keeps eyes and ears fully open to one's real-space environment while providing immersive qualities on demand. Transparent headgear solves the problem of socializing and sharing with others in a common A/V space.

Future recording studios will give us our familiar working tools: mixing consoles, outboard equipment, patchbays, DAW data screens, and boxy audio monitors. The difference is that all of this "equipment" will exist in virtual space. When we don our VR headgear, everything required for audio or visual production is there "in front of us" with lifelike realism. In the virtual studio, every functional piece of audio gear (every knob, fader, switch, screen, waveform, plug-in, meter, and patch point) will be visible and gesture-controllable entirely in immersive space. Music post-production will no longer be subject to variable room acoustics. A recording's spatial and timbral qualities will remain consistent across every studio in the world, because the "studio" will be sitting on one's head. Forget the classic mix room monitor array with big soffits, bookshelves, and Auratones. Head-worn A/V allows the audio engineer to emulate and audition virtually any monitor environment, including any known real-space or legacy playback system, from any position in any physical room.
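Emulating a monitor environment over headphones is commonly done by convolving the dry signal with binaural room impulse responses (BRIRs), one per ear, measured or modeled for the room and speaker in question. The sketch below shows that operation with toy, made-up impulse responses. Real BRIRs run to thousands of taps and are usually convolved with FFT-based methods rather than this direct loop. The function names are illustrative.

```python
from typing import List, Tuple


def convolve(signal: List[float], impulse: List[float]) -> List[float]:
    """Direct-form linear convolution; output length is len(signal) + len(impulse) - 1."""
    out = [0.0] * (len(signal) + len(impulse) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse):
            out[i + j] += s * h
    return out


def auralize(dry: List[float], brir_left: List[float], brir_right: List[float]) -> Tuple[List[float], List[float]]:
    """Render a dry mono signal as heard in a measured room: one convolution per ear."""
    return convolve(dry, brir_left), convolve(dry, brir_right)


if __name__ == "__main__":
    # Toy, made-up impulse responses standing in for a measured control room.
    # The right ear hears the speaker one sample later and slightly quieter.
    toy_left = [1.0, 0.0, 0.3, 0.1]
    toy_right = [0.0, 0.8, 0.2, 0.1]
    left, right = auralize([1.0, 0.5], toy_left, toy_right)
    print(left, right)
```

Swapping in a different pair of impulse responses is all it takes to audition a different room or monitor.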

Matured gestural control (2035+) will allow us to reach out and control any "thing" in the studio. Ergonomic efficiency will be vastly improved with scalable depth-of-field. In other words, whatever function you want to control comes to you. Haptic touch (emulated physical feedback) will add an extra layer of realism (2030+). Any device in the virtual studio can be changed with one voice or gestural command. Don't like the sound of that Helios console? Install The Beatles' EMI Abbey Road console. Prefer a Millennia NSEQ-4 over the NSEQ-2? Any change engages instantly for fast A/B comparisons.
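In software terms, "install a different console" is a model swap behind a stable interface. The sketch below is a hypothetical design. The slot class and both console models are invented for illustration and are not emulations of any real hardware. Each slot holds named processing models, and a single call switches the active one for an A/B comparison.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Processor = Callable[[float], float]  # hypothetical per-sample processing model


@dataclass
class VirtualRackSlot:
    """One slot in a hypothetical virtual rack, holding interchangeable models."""
    models: Dict[str, Processor]
    active: str

    def swap(self, name: str) -> None:
        """Switch the active model (the 'one voice or gestural command')."""
        if name not in self.models:
            raise KeyError(f"no model named {name!r}")
        self.active = name

    def process(self, block: List[float]) -> List[float]:
        model = self.models[self.active]
        return [model(x) for x in block]


def ab_compare(slot: VirtualRackSlot, block: List[float], a: str, b: str) -> Tuple[List[float], List[float]]:
    """Run the same audio through two models, leaving model `a` active afterwards."""
    slot.swap(b)
    out_b = slot.process(block)
    slot.swap(a)
    out_a = slot.process(block)
    return out_a, out_b


if __name__ == "__main__":
    slot = VirtualRackSlot(
        models={
            "clean": lambda x: x,                                   # hypothetical transparent path
            "warm": lambda x: math.tanh(1.5 * x) / math.tanh(1.5),  # hypothetical soft saturation
        },
        active="clean",
    )
    a, b = ab_compare(slot, [0.0, 0.5, 1.0], "clean", "warm")
    print(a, b)
```

Keeping every model behind the same `process` call is what makes the swap instant. The rest of the virtual signal chain never needs to know which "console" is loaded.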

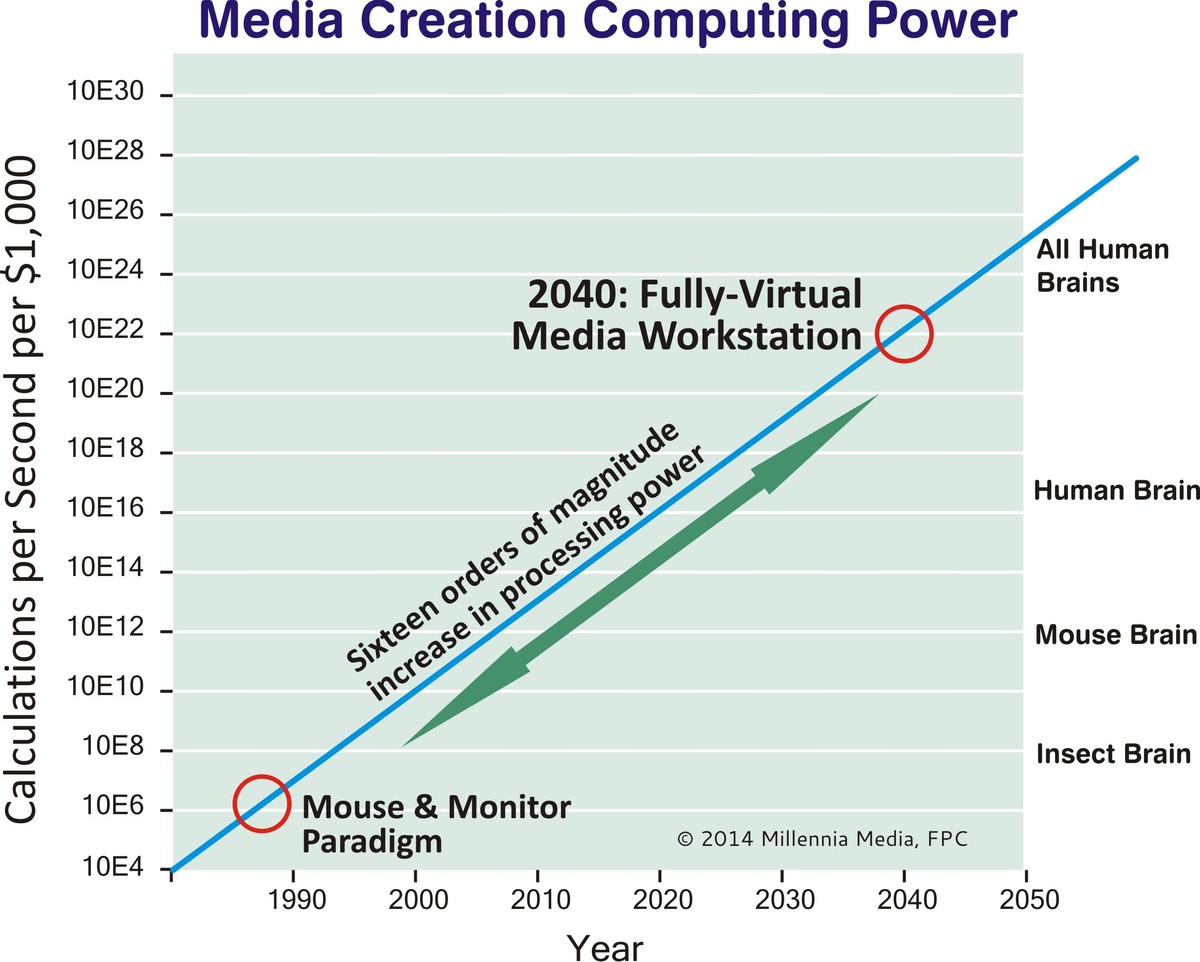

PUSHING THE BOUNDARIES

Today, a $400 Sony PS4 employs around 5 billion transistors, with 2-teraflop graphics processing, about the same computing power as one mouse brain (according to inventor and futurist Ray Kurzweil). By 2027 a commodity gaming console will be 10,000 times more powerful than today's machines. That's the processing power of a human brain sitting on your desktop, roughly equivalent to the power of IBM's Watson supercomputer in 2010. With effectively unlimited processing power, and profoundly advanced AI, our future production tools will allow us to call up a visually and musically correct symphony orchestra in any concert hall of our choosing. Let's add a Bulgarian choir, or maybe Yo-Yo Ma on his carbon fiber cello. Immersive media creation systems will allow us to organically develop our own musical ideas by intelligently interacting with each desk of a symphony orchestra, or a gamelan orchestra, or a rock band (or whatever) of any size, and in any space (assuming our desired players, instruments, and acoustic spaces have been characterized). Gestural and voice commands will make refinements to the score and performance, just as a conductor or producer would rehearse real-space talent, until the ensemble plays exactly as we envision. Our technology growth charts suggest that by 2040, we will achieve all of this (and perhaps more) in a VMW (Virtual Media Workstation).
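A 10,000-fold gain by 2027 implies a faster cadence than the two-year doubling assumed elsewhere for gesture and head tracking. The sketch below backs out the doubling period that claim requires, along with the raw throughput it implies (2 teraflops x 10,000 = 20 petaflops). It assumes 2013 as the starting year; that choice and the function name are ours.

```python
import math

# Figures from the text; treating 2013 as "today" is our assumption.
BASE_YEAR = 2013
TARGET_YEAR = 2027
CLAIMED_GAIN = 10_000
PS4_TFLOPS = 2.0


def implied_doubling_years(gain: float, years: float) -> float:
    """Doubling period needed to achieve `gain` within `years`."""
    return years / math.log2(gain)


if __name__ == "__main__":
    period = implied_doubling_years(CLAIMED_GAIN, TARGET_YEAR - BASE_YEAR)
    print(f"implied doubling period: {period:.2f} years (~{period * 12:.0f} months)")
    print(f"projected console throughput: {PS4_TFLOPS * CLAIMED_GAIN / 1000:.0f} petaflops")
```

The result is a doubling roughly every year, about 13 months.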

The future of audio, music, filmmaking, game design, TV, industrial apps, and any other creative media becomes effectively unbounded. Personally, I dream about recording music directly from my thoughts: a non-invasive brain-machine music interface. It turns out that this dream is moving from science fiction to reality. And if we chart a conservative two-year doubling period for cortex sensing resolution, by the early 22nd century our non-invasive brain interfaces will be roughly 14 orders of magnitude more powerful than today. Will high-resolution brain sensing truly give us the ability to think music and imagery directly into our computers? Or does this simply blur the line between our brains and our computers, so that the entire paradigm of augmented thinking and collective knowledge is radically shifted? At that point, when we have billions of devices globally networked, and each device is trillions of times smarter than the combined intelligence of all humanity, what will our species become? What will our collective thought processes look like? Will our future notions of "art" resemble the forms of art we cherish today?

The machine is getting closer to us. Our creations are becoming more lifelike, more like us. Machines were once "out there" as separate ideas doing separate work. Moving forward, smart machines will become an increasingly intimate part of daily life; they'll exist on our heads, closer to our senses, and in the infrastructure of our homes, vehicles, and businesses. I find this both exciting and frightening, as radically new opportunities, and profound social challenges, await us.

More than a century ago, Oscar Wilde noted that life imitates art. Today, technology mimics science fiction. In the not-too-distant future, audio engineers will have a Holodeck on their head. The future of music, audio, filmmaking, gaming, and any creative media construction, from inception to post-production to delivery, is truly boundless and limited only by our collective imagination.