The U.S. National Transportation Safety Board (NTSB) has completed its investigation into a fatal crash involving a semi-truck and a Tesla Model S that was using semi-automated driving systems. The causes of the crash are complex, but the report highlights problems with self-driving vehicles that should concern us all.

The crash happened in May 2016 in Florida. It drew wide media attention because the person killed was the driver of a Tesla Model S who was using the car's "Autopilot" semi-automated driving system. Blame has been directed at both the truck driver and the Tesla driver. Based on evidence from the crash, the NTSB's report faults both drivers as well as the way Tesla's Autopilot handled the situation.

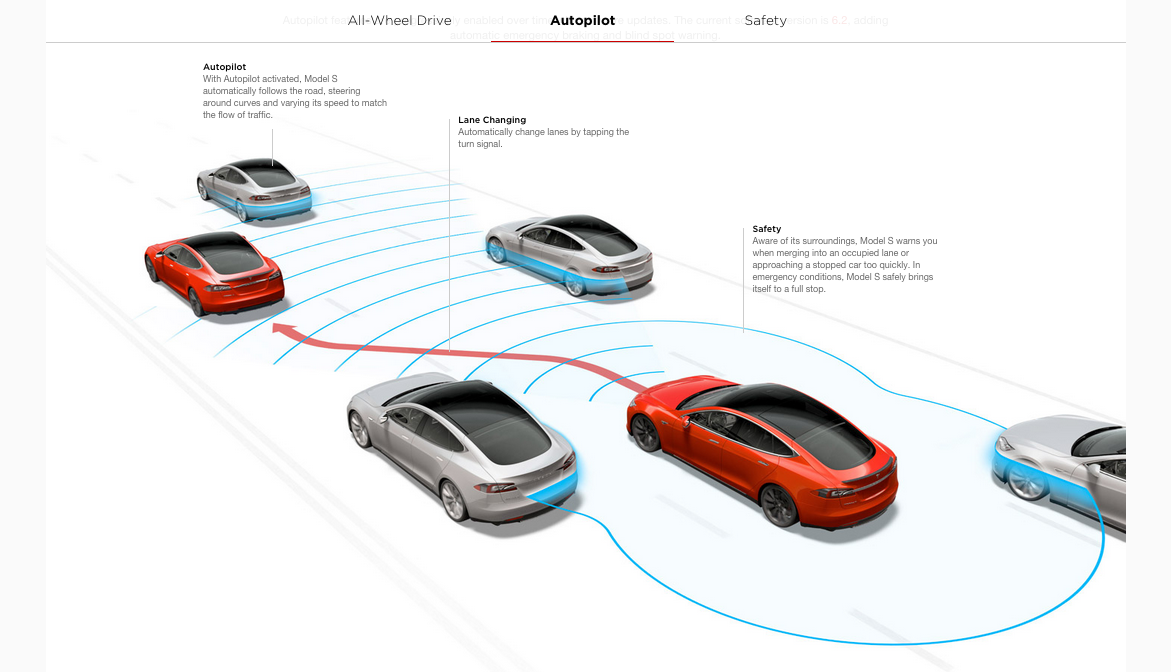

Tesla Motors has taken a lot of flak for the name of its system and for relying on small print to explain that it is not, in fact, the fully autonomous driving system the name might imply. To the company's credit, though, it has revised much of its marketing and has since changed the software that controls Autopilot, changes the NTSB report acknowledged.

Yet blame for the crash itself is not terribly important. What matters more is what can be learned from it: namely, some of the inherent dangers of autonomous vehicles, how we perceive them, and how they will function in a world where human and computer drivers share the road. How vehicle automation develops in the near future will shape the public's perception of self-driving vehicles for some time.

In its report on the fatal Tesla crash, the NTSB placed blame on the driver of the semi-truck, the Tesla driver, and the car's automated systems. All three "drivers" (truck driver, car driver, and computer) made serious mistakes that ultimately led to the crash.

The semi-truck driver did not yield the right of way, causing the big rig to move unexpectedly into the Tesla's path. The driver of the Model S was not paying attention to the road at all, relying entirely on the car's automated driving systems. The Autopilot system was not designed for fully automated driving and, because of limitations in its sensor setup, had no way of "seeing" the impending crash. Nor did the Tesla do enough to warn the driver about his inattention to the road and to the task of driving.

The crash unfolded as follows. The truck driver failed to yield the right of way and entered the Tesla's path as the car continued forward. The only sign of possible impairment was a trace of marijuana in the truck driver's blood, and the investigation found no other distractions.

Meanwhile, the Model S driver was not paying attention to the road at all, though exactly what he was doing could not be determined. His death was clearly caused by the crash itself, indicating that he did not suffer a medical emergency or other problem that could have led to the incident. According to data recorded by the car, the driver had a history of misusing the Autopilot system in this way.

The Model S's Autopilot system had alerted the driver to his inattention several times, but it went no further and, the NTSB found, did not do enough to prevent the driver from ceding all control to the car. Furthermore, the car's sensors and onboard systems could not detect the truck or its potential (and eventual) crossing of the car's path, so they did not trigger emergency braking or evasive maneuvers. That last point shows how often the capabilities of today's semi-automated driving systems are misunderstood.

Based on these findings, the NTSB issued several recommendations for semi-automated vehicles. In its own investigation into the crash, and with early input from the NTSB, Tesla found problems with Autopilot's driver inattention warnings and has since taken steps to fix them. Tesla Motors has also revised most of its marketing materials to emphasize that Autopilot is not a fully autonomous driving system and that drivers must remain engaged in driving even when it is activated.

The NTSB recommends that manufacturers restrict semi-automated vehicle control systems to the conditions they were designed for, to keep drivers from misusing them. For example, a semi-automated system designed for highway-speed commuting would not operate below those speeds and would not function in situations that require reading road signs or responding to pedestrian crossings and the like.

Today, most consumer semi-automated driving systems are built around adaptive cruise control. They are designed to monitor traffic on freeways and highways, where multiple lanes are available and cross-traffic and pedestrians are not expected. These systems commonly require the driver to keep his or her hands on the steering wheel at all times and are increasingly augmented by "driver awareness" monitors that gauge how attentive the driver is. Most of these monitors work by measuring the driver's ability to keep the vehicle within its lane without assistance. Some also track the driver's head position, steering-wheel input, and position in the seat.

The NTSB also called for vehicle event data to be captured in all semi-automated vehicles and made available in standard formats so investigators can use it more easily. It called for manufacturers to build in robust safeguards that limit the use of automated control systems, and for the development of technology that more effectively senses the driver's level of engagement.

The NTSB also asked manufacturers to report incidents involving semi-automated vehicle control systems more closely. These recommendations were issued to the National Highway Traffic Safety Administration, the U.S. Department of Transportation, the Alliance of Automobile Manufacturers, the Global Automakers group, and the individual manufacturers designing and implementing autonomous vehicle technologies.

Alongside the release of the NTSB's summary report today, the U.S. Department of Transportation released its own guidance on automated driving systems. These federal guidelines are voluntary: vehicle manufacturers are asked, not required, to follow them.

Source: NTSB